Research


Research Outputs

Sensor Recognition Technology

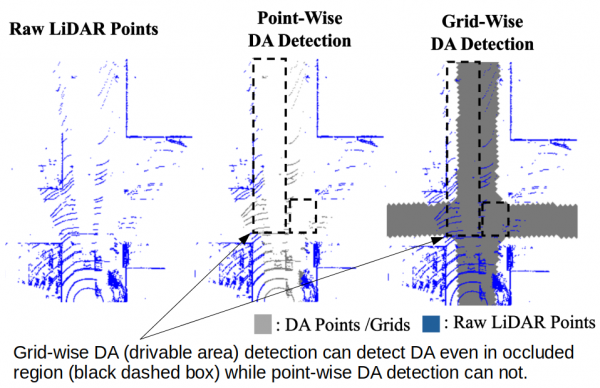

Development of Argoverse-grid Dataset and Grid-DATrNet Model for LiDAR-based Drivable Area Detection

- Constructed the Argoverse-grid dataset, a grid-based drivable area (DA) detection dataset derived from Argoverse 1, containing over 20K frames with fine-grained BEV labels.

- Proposed Grid-DATrNet, the first transformer-based grid DA detection model.

- Achieved state-of-the-art performance with Grid-DATrNet, reaching 93.28% accuracy and an 83.28% F1-score.

- Grid-DATrNet leverages global attention to outperform CNN-based models in occluded and unmeasured areas.
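The accuracy and F1-score quoted above are grid metrics, computed cell by cell over the BEV grid. The sketch below shows the generic computation, assuming binary grids where 1 marks a drivable cell; the function name `grid_metrics` is hypothetical, and how the Argoverse-grid benchmark treats unmeasured cells is not covered here.

```python
def grid_metrics(pred, label):
    """Cell-wise accuracy and F1-score for binary BEV drivable-area grids.

    pred, label: equally sized 2D lists of 0/1, where 1 = drivable (assumed encoding).
    """
    tp = fp = fn = tn = 0
    for pred_row, label_row in zip(pred, label):
        for p, y in zip(pred_row, label_row):
            if p and y:
                tp += 1          # drivable, predicted drivable
            elif p:
                fp += 1          # non-drivable, predicted drivable
            elif y:
                fn += 1          # drivable, missed
            else:
                tn += 1          # non-drivable, correctly rejected
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return accuracy, f1

pred = [[1, 1, 0],
        [0, 1, 0]]
label = [[1, 0, 0],
         [1, 1, 0]]
acc, f1 = grid_metrics(pred, label)
```

Because drivable and non-drivable cells are often imbalanced in a BEV grid, the F1-score on the drivable class is usually the more discriminating of the two numbers.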

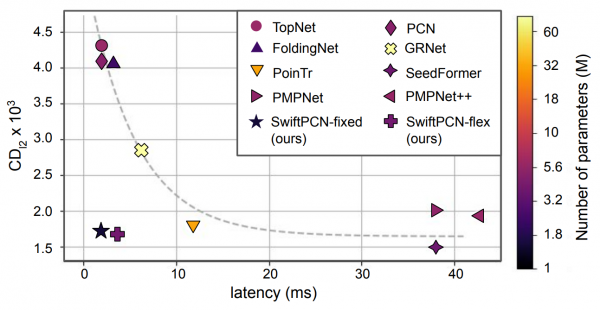

SwiftPCN: Development of a Fast, Implementation-Efficient, and Accurate Point Cloud Completion Network with Flexible Output Resolution

- Existing point cloud completion networks (PCCNs) have achieved remarkable completion accuracy but face limitations in terms of inference speed and memory consumption. Additionally, their fixed output resolution often leads to mismatched point densities when input scans vary in resolution.

- SwiftPCN is a novel PCCN consisting of two new networks: (1) SwiftPCN-fixed and (2) SwiftPCN-flex. It provides fast and accurate completion performance while supporting flexible output resolutions.

- SwiftPCN-fixed achieves an excellent balance between completion accuracy, inference latency, and the number of parameters compared to existing networks.
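Completion accuracy of PCCNs is commonly reported with the Chamfer distance (CD), which compares two point sets that may contain different numbers of points, a useful property when the output resolution is flexible. The sketch below is the generic brute-force definition, not SwiftPCN's evaluation code; papers differ on conventions (squared vs. unsquared distances, sum vs. mean).

```python
import math

def chamfer_distance(a, b):
    """Symmetric Chamfer distance between two point sets (lists of (x, y, z)).

    Uses the mean squared nearest-neighbour distance in both directions
    (one common convention); brute force, O(len(a) * len(b)).
    """
    def mean_nn_sq(src, dst):
        # For each source point, squared distance to its nearest destination point.
        return sum(min(math.dist(p, q) ** 2 for q in dst) for p in src) / len(src)
    return mean_nn_sq(a, b) + mean_nn_sq(b, a)

partial = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
completed = [(0.0, 0.0, 0.0)]
cd = chamfer_distance(partial, completed)
```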

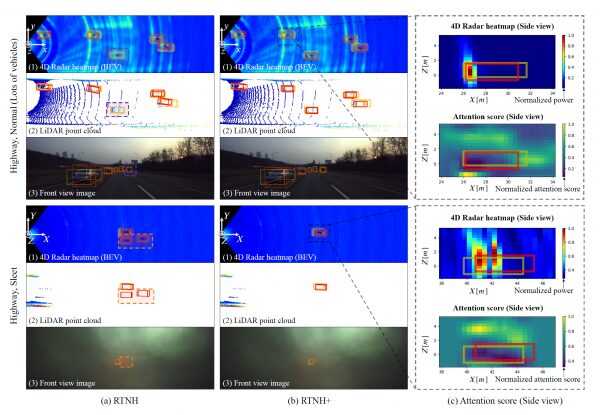

RTNH+: Development of a Preprocessing and VE-based Network for 4D Radar Object Detection

- Proposed RTNH+, an improved version of RTNH, significantly enhancing object detection performance using 4D radar measurements.

- The Two Level Processing (TLP) algorithm generates two differently filtered outputs from the same 4D radar data, enriching the data representation.

- The vertical encoding (VE) algorithm effectively encodes the vertical object features from the TLP outputs.

- Compared to RTNH, RTNH+ improves AP (3D, IoU = 0.3) by 10.14% and AP (3D, IoU = 0.5) by 16.12%.
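The text does not spell out how TLP produces its two outputs. One plausible reading, assumed here purely for illustration, is that the same 4D radar tensor is filtered at two power-quantile levels: a strict level that keeps only the strongest cells and a loose level that keeps more context. The function names and quantile values below are hypothetical.

```python
def quantile_threshold(values, q):
    """Value at quantile q (0..1) of a list, using nearest rank on the sorted list."""
    ordered = sorted(values)
    idx = min(len(ordered) - 1, int(q * len(ordered)))
    return ordered[idx]

def two_level_filter(cells, q_strict=0.99, q_loose=0.9):
    """Hypothetical TLP-style filtering of radar tensor cells.

    cells: list of (x, y, z, power). Returns two point sets derived from the
    same input: cells above a strict power quantile and cells above a loose one.
    """
    powers = [c[3] for c in cells]
    t_strict = quantile_threshold(powers, q_strict)
    t_loose = quantile_threshold(powers, q_loose)
    strict = [c for c in cells if c[3] >= t_strict]
    loose = [c for c in cells if c[3] >= t_loose]
    return strict, loose

cells = [(float(i), 0.0, 0.0, float(i)) for i in range(100)]
strict, loose = two_level_filter(cells)
```

A downstream encoder could then consume both sets, so sparse strong returns and denser weak returns are both available to the network.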

Precision Positioning Technology

Stepped-Frequency Binary Offset Carrier Modulation for Global Navigation Satellite Systems

- A novel modulation technique, Stepped-Frequency Binary Offset Carrier (SFBOC), is proposed for GNSS.

- SFBOC modulation varies the subcarrier frequency stepwise within the period of the Pseudo Random Noise (PRN) code, resulting in a sharp autocorrelation function (ACF) output and a flat spectrum over a wide frequency band.

- Compared to conventional GNSS modulations and other time-varying subcarrier frequency (TVSF) modulations, SFBOC demonstrates higher ranging accuracy, lower tracking ambiguity, and superior robustness to noise, interference, and multipath.
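To make the stepping concrete, the sketch below builds a baseband SFBOC-style sample sequence: a PRN code multiplied by a sine-phased square subcarrier whose frequency changes from segment to segment within one code period, followed by its normalized circular autocorrelation. The segment layout (equal-length segments, subcarrier stepping from 6 down to 1 cycles per chip, loosely mirroring the VBOC(6:-1:1,1) caption below) is an assumption for illustration, not the published SFBOC definition.

```python
import math
import random

def sfboc_samples(code, multiples, samples_per_chip):
    """Baseband SFBOC-style waveform (illustrative assumption).

    code: PRN chips (+1/-1).
    multiples: subcarrier frequency in cycles per chip for each equal-length
        segment of the code period, e.g. [6, 5, 4, 3, 2, 1].
    samples_per_chip: ideally divisible by 2 * m for every m in multiples,
        so each subcarrier half-period is sampled evenly.
    """
    seg_len = math.ceil(len(code) / len(multiples))
    out = []
    for i, chip in enumerate(code):
        m = multiples[i // seg_len]  # subcarrier step for this part of the code period
        for s in range(samples_per_chip):
            t = (s + 0.5) / samples_per_chip  # sample midpoint, in chips
            sub = 1 if math.sin(2 * math.pi * m * t) > 0 else -1  # square subcarrier
            out.append(chip * sub)
    return out

def circular_acf(x, max_lag):
    """Normalized circular autocorrelation for lags 0..max_lag (in samples)."""
    n = len(x)
    return [sum(x[i] * x[(i + k) % n] for i in range(n)) / n for k in range(max_lag + 1)]

rng = random.Random(0)
code = [rng.choice((1, -1)) for _ in range(60)]
x = sfboc_samples(code, [6, 5, 4, 3, 2, 1], samples_per_chip=120)
acf = circular_acf(x, max_lag=48)
```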

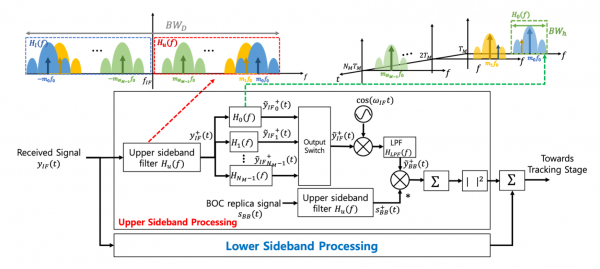

BOC-FPDNet: Development of a First Arrival Path Detection Network for BOC Signals in Multipath Environments

- Binary Offset Carrier (BOC) modulation, used in GNSS, has side-peaks in the autocorrelation function (ACF), leading to positioning errors in multipath channels.

- To address this issue, we propose the BOC First Arrival Path Detection Network (BOC-FPDNet).

- BOC-FPDNet consists of two stages: Side-peaks Cancellation (SC) and Multipath Time Delay Estimation (MDE).

- SC is a deep learning-based method that eliminates side-peaks without additional computational load or noise increase.

- MDE uses Multi-head Attention to extract ACF output features and employs positive and negative sample detectors to estimate time delays.
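The side-peaks mentioned above come from the subcarrier itself. The sketch computes the autocorrelation of a single sine-phased BOC(1,1) chip pulse, which for an ideal random PRN code gives the shape of the code ACF within one chip of the main peak: a peak of 1 at zero lag, zeros at ±1/3 chip, and negative side-peaks of -0.5 at ±1/2 chip. It illustrates the textbook ACF shape only, not BOC-FPDNet's SC stage.

```python
def boc_pulse(m, n, samples_per_chip):
    """One chip of sine-phased BOC(m, n): square subcarrier with 2m/n half-periods per chip.

    Assumes 2m is divisible by n and samples_per_chip by 2m/n.
    """
    half_periods = 2 * m // n
    samples_per_half = samples_per_chip // half_periods
    return [(-1) ** (s // samples_per_half) for s in range(samples_per_chip)]

def pulse_acf(pulse):
    """Aperiodic autocorrelation of one chip pulse, normalized to 1 at zero lag."""
    n = len(pulse)
    energy = sum(p * p for p in pulse)
    return [sum(pulse[i] * pulse[i + k] for i in range(n - k)) / energy for k in range(n + 1)]

# Lag index k corresponds to k / 12 chip.
acf = pulse_acf(boc_pulse(1, 1, samples_per_chip=12))
```

A tracking loop that locks onto a side-peak makes a ranging error of about half a chip, roughly 147 m for a 1.023 Mchip/s code, which is why side-peak cancellation matters.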

End-to-end Autonomous Driving

End-to-End Autonomous System

- Unlike the modular method, in which each module has a fixed role, the end-to-end method makes driving decisions directly from all of the input data with a single learned model.

Transfer from Simulation to Real (Sim2Real)

- Collecting training data for driving policies on real roads is very difficult because of the high risk of accidents. To overcome this data-collection limitation, we study Sim2Real technology, which trains in a simulation environment, minimizes the reality gap between simulation and the real environment, and transfers the learned policy efficiently from simulation to the real world.

RL-based End-to-end Autonomous Driving

Architecture of RL-SESR (Segmented Encoder for Sim2Real)

RL-SESR Field Test

- Reinforcement learning (RL) is a learning technique in which an agent, here the vehicle in the driving environment, observes its current state and selects, among the available actions (throttle, steering, etc.), the one that maximizes the expected cumulative reward.

- Unlike conventional modular autonomous driving, RL-based end-to-end autonomous driving can learn by itself to cope with a variety of driving situations that were never explicitly defined.

- The high-dimensional camera image is first segmented and then compressed by a VAE (Variational AutoEncoder) into a low-dimensional latent representation, which improves the generality of the state.
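In this pipeline the VAE maps the segmented image to a low-dimensional latent vector that serves as the RL state. The helpers below sketch the two standard VAE pieces: the reparameterization trick used to sample the latent vector, and the closed-form KL term that keeps the latent space close to a standard normal. The encoder network and latent size are not given here; `mu` and `log_var` stand in for encoder outputs and the values used are hypothetical.

```python
import math
import random

def reparameterize(mu, log_var, rng):
    """Sample z = mu + sigma * eps with eps ~ N(0, 1) (VAE reparameterization trick)."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]

def kl_to_standard_normal(mu, log_var):
    """KL(N(mu, sigma^2) || N(0, I)) for a diagonal Gaussian, summed over latent dims."""
    return -0.5 * sum(1 + lv - m * m - math.exp(lv) for m, lv in zip(mu, log_var))

rng = random.Random(0)
mu, log_var = [0.2, -1.0, 0.5], [-1.0, -2.0, 0.0]  # hypothetical encoder outputs
z = reparameterize(mu, log_var, rng)               # compact state fed to the RL policy
kl = kl_to_standard_normal(mu, log_var)
```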

IL-based End-to-end Autonomous Driving

Architecture of Imitation Learning-based E2E Autonomous Driving

IL-based E2E Driving Field Test

- Imitation learning (IL) is a learning technique in which the vehicle learns to imitate human drivers' driving policies from recorded driving data.

- Because imitation learning learns its policy from data, its performance inevitably degrades in situations the data do not cover. It is also limited in that, unlike a human driver, it always outputs the same behavior for the same state.

- To overcome these limitations, we are studying a hybrid (RL+IL) learning technique that combines the characteristics of imitation learning and reinforcement learning.
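In its simplest form (behavior cloning), imitation learning is supervised regression from states to the human driver's actions. The toy sketch below fits a one-dimensional linear steering policy by least squares; the state (lateral offset from the lane center) and the demonstration data are hypothetical, and real IL policies are deep networks over sensor input.

```python
def fit_linear_policy(states, actions):
    """Behavior cloning with a 1D linear policy: action = a * state + b (least squares)."""
    n = len(states)
    mean_s = sum(states) / n
    mean_a = sum(actions) / n
    cov = sum((s - mean_s) * (u - mean_a) for s, u in zip(states, actions))
    var = sum((s - mean_s) ** 2 for s in states)
    a = cov / var
    b = mean_a - a * mean_s
    return a, b

# Hypothetical demonstrations: lateral offset (m) -> steering command.
offsets = [-1.0, 0.0, 1.0, 2.0]
steering = [0.6, 0.1, -0.4, -0.9]
a, b = fit_linear_policy(offsets, steering)
```

The fitted policy shows both limitations noted above: for an offset far outside the demonstrated range (say 5 m) it simply extrapolates the line, and it always returns the same action for the same state.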

Sensor perception technology

Lidar point cloud lane perception dataset construction, AI-based lane and object perception

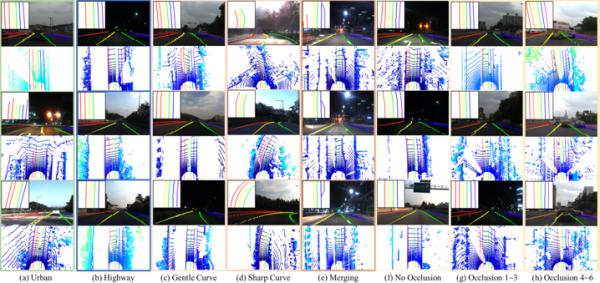

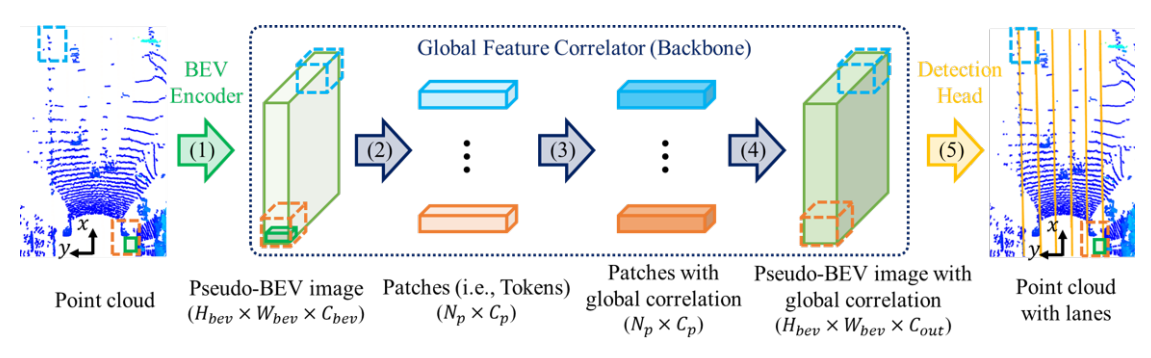

- AVE Lab built (1) K-Lane, the world's first large-scale LiDAR lane detection dataset, and (2) an artificial intelligence network tailored to the characteristics of LiDAR lanes, the Lidar Lane Detection Network utilizing Global Feature Correlator (hereafter LLDN-GFC). LLDN-GFC achieved the world's best lane detection performance, and the work was published at a top computer vision conference.

- K-Lane provides more than 15K point clouds and front camera images acquired in various urban and highway environments (e.g., day, night, curves, occluded lanes), along with a development framework (network training, evaluation, visualization, and labeling) released on the lab's GitHub to advance LiDAR lane detection research.

- LLDN-GFC improves the F1-score by 6.1% over conventional convolutional neural network (CNN)-based networks, and by 10.2% when multiple lanes are occluded, enabling safe autonomous driving even in heavy traffic.
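One common way to correlate features globally is scaled dot-product self-attention, in which every BEV patch attends to every other patch. The sketch below is that generic mechanism with the patch features used directly as queries, keys, and values (an assumption; learned projections are omitted), not the GFC's actual design.

```python
import math

def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(tokens):
    """Scaled dot-product self-attention over patch feature vectors.

    Queries, keys and values are the tokens themselves, so every patch's
    output is a weighted mix of all patches in the grid.
    """
    d = len(tokens[0])
    weights = [softmax([sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in tokens])
               for q in tokens]
    outputs = [[sum(w * v[j] for w, v in zip(row, tokens)) for j in range(d)]
               for row in weights]
    return outputs, weights

patches = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # hypothetical patch features
out, attn = self_attention(patches)
```

Because each weight row spans all patches, a lane segment hidden behind a vehicle can draw on distant, visible segments of the same lane, which a CNN's local receptive field cannot do directly.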

K-Lane Dataset Examples

Labeling program

Lidar lane detection inference

Lidar lane detection framework

4D Radar object perception technology

- 4D radar is the only perception sensor for autonomous driving that can both (1) operate robustly in adverse weather (e.g., heavy snow, heavy rain, fog) and (2) measure the relative velocity of objects along with 3D spatial information about the surrounding environment.

- AVElab has built K-Radar, the world's first large-scale 4D radar dataset and benchmark for autonomous driving in adverse weather.

- K-Radar (1) provides 35K frames of 4D radar data precisely labeled with 3D bounding boxes and velocity information for the major objects on the road, (2) was acquired in extreme weather (e.g., snowfall of 4 cm or more per hour) and in various road environments (e.g., highways, national roads, urban areas), and (3) provides precisely synchronized camera, LiDAR, RTK-GPS, and IMU data through the lab's GitHub.

- AVE Lab also developed an encoding technique specialized for 4D radar. The resulting network achieved the world's best 3D object detection performance and showed that stable 3D object detection remains possible in adverse weather, where camera and LiDAR measurements are lost.
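A 4D radar measures each return in range, azimuth, elevation, and Doppler (radial relative velocity). Turning such measurements into 3D points is a spherical-to-Cartesian conversion, sketched below. The axis convention (x forward, y left, z up, azimuth measured from x toward y, elevation from the horizontal plane) is an assumption; K-Radar's own convention may differ.

```python
import math

def radar_to_point(r, azimuth, elevation, doppler):
    """Convert one 4D radar measurement to (x, y, z, v_r).

    r in metres, angles in radians, doppler = radial relative velocity in m/s,
    kept as a per-point feature because it is not a full 3D velocity vector.
    """
    x = r * math.cos(elevation) * math.cos(azimuth)
    y = r * math.cos(elevation) * math.sin(azimuth)
    z = r * math.sin(elevation)
    return x, y, z, doppler

ahead = radar_to_point(10.0, 0.0, 0.0, -2.5)  # target straight ahead, closing at 2.5 m/s
```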

4D Radar object detection inference result

Sensor measurement in heavy snow condition

4D Radar labeling process

4D Radar object detection framework

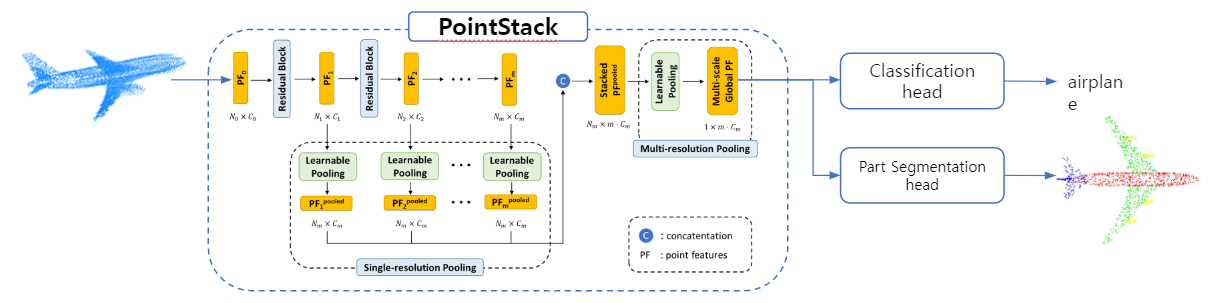

Point cloud feature extraction technology

- PointStack, developed by AVElab, is a new point cloud feature learning network that uses multi-resolution features and learnable pooling.

- The following components of PointStack were newly designed by AVElab for feature learning tasks:

- Multi-resolution features: minimize the loss of detailed information during downsampling.

- Learnable pooling: minimizes the loss of point feature information beyond the maximum value (which is all that max pooling keeps) during feature pooling.

- PointStack currently achieves state-of-the-art performance and can serve as a feature extraction backbone for a wide variety of tasks such as classification, part segmentation, and object detection.
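Max pooling keeps only the largest value of each feature channel across points. A learnable alternative, sketched here, scores each point with a learned weight vector and takes a softmax-weighted sum, so every point contributes to the pooled feature. This is a generic attention-style pooling under our own assumptions (a single hypothetical score vector `w`), not PointStack's exact module.

```python
import math

def max_pool(features):
    """Channel-wise max over points (features: list of per-point vectors)."""
    return [max(col) for col in zip(*features)]

def learnable_pool(features, w):
    """Softmax-weighted sum of point features; w is a learnable score vector."""
    scores = [sum(fi * wi for fi, wi in zip(f, w)) for f in features]
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    return [sum(a * f[j] for a, f in zip(weights, features)) for j in range(len(features[0]))]

points = [[1.0, 0.0], [3.0, 2.0], [2.0, 4.0]]
pooled_max = max_pool(points)
pooled_uniform = learnable_pool(points, [0.0, 0.0])  # untrained w: plain average
```

With `w` at zero every point gets equal weight (average pooling); as `w` grows along one direction the weights concentrate on the highest-scoring point, so training can move between the two behaviors.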

Architecture of PointStack. As a general feature learning backbone, PointStack can be used for various tasks such as classification and segmentation

Part-segmentation visualization of ground truths (G.T.) and predictions (Pred)

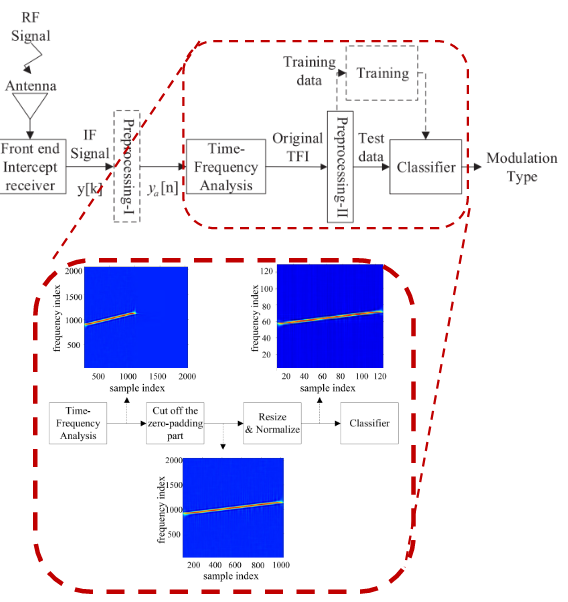

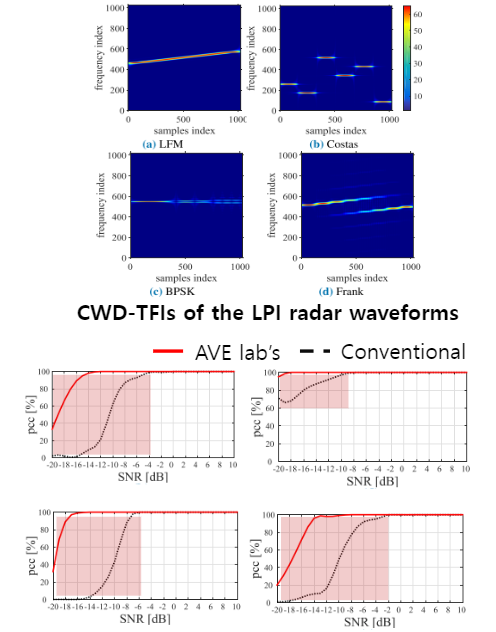

LPI RADAR signal detection technology

- We developed a technology that recognizes and classifies pulse waveforms (PW) and continuous waveforms (CW) using a convolutional neural network (CNN).

- The developed LPI (low probability of intercept) radar signal recognition technology can distinguish a total of 12 waveforms (four existing waveforms and eight modulated waveforms) and is robust to noise.
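Noise robustness of a waveform classifier is typically evaluated by sweeping the signal-to-noise ratio (SNR) of the test signals. The sketch generates one representative LPI waveform, a linear frequency modulated (LFM) chirp, and adds white Gaussian noise at a chosen SNR. The waveform parameters are hypothetical, and the lab's 12 waveform classes are not reproduced here.

```python
import math
import random

def lfm_chirp(n, f0, bandwidth, fs):
    """Real LFM pulse: frequency sweeps linearly from f0 to f0 + bandwidth over n samples."""
    k = bandwidth / (n / fs)  # sweep rate in Hz/s
    return [math.cos(2 * math.pi * (f0 * t + 0.5 * k * t * t)) for t in (i / fs for i in range(n))]

def add_awgn(signal, snr_db, rng):
    """Add white Gaussian noise so that signal power / noise power = 10^(snr_db / 10)."""
    p_signal = sum(s * s for s in signal) / len(signal)
    sigma = math.sqrt(p_signal / 10 ** (snr_db / 10))
    return [s + rng.gauss(0.0, sigma) for s in signal]

rng = random.Random(1)
sig = lfm_chirp(4000, f0=1e3, bandwidth=4e3, fs=20e3)
noisy = add_awgn(sig, snr_db=0.0, rng=rng)
```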

AVE lab’s LPI waveform recognition system

Performance Comparison

Advanced Positioning technology

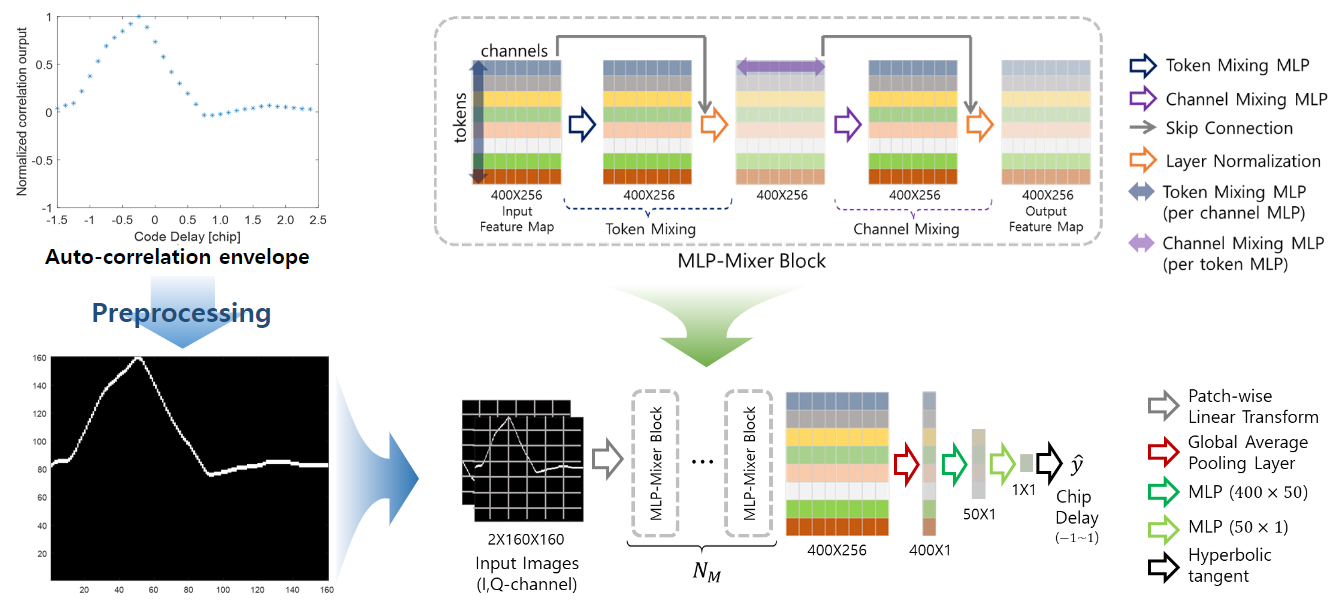

AI-based Multipath mitigation

Input image for the in-phase component

- To mitigate the influence of multipath channels in urban areas, we developed an artificial intelligence-based technique that detects the first-arriving GPS signal.

- It achieves much more accurate detection at a lower computational cost than existing super-resolution-based first-arrival signal detection techniques.

- To select the optimal network, we benchmarked various artificial intelligence networks and verified them with real signals, yielding practical research results.
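Why the correlation peak alone is not enough: GPS C/A uses BPSK modulation, whose ideal ACF is a triangle one chip wide on each side, and the receiver sees the sum of delayed, scaled copies of it. The sketch uses a hypothetical two-path channel in which the reflection is stronger than the direct path, so the composite peak lands on the reflection rather than on the first arrival.

```python
def bpsk_acf(tau):
    """Ideal normalized ACF of a BPSK (e.g. GPS C/A) code; tau in chips."""
    return max(0.0, 1.0 - abs(tau))

def multipath_acf(tau, paths):
    """Received correlation: sum of delayed, scaled ACFs; paths = [(amplitude, delay_in_chips)]."""
    return sum(a * bpsk_acf(tau - d) for a, d in paths)

# Hypothetical channel: weak direct path at 0 chips, stronger reflection at 0.3 chips.
paths = [(0.5, 0.0), (1.0, 0.3)]
taus = [i / 100 for i in range(-150, 151)]
peak_delay = max(taus, key=lambda t: multipath_acf(t, paths))
first_arrival = min(d for _, d in paths)
```

Here the peak sits at 0.3 chips (about 88 m for a 1.023 Mchip/s code) even though the direct path arrives at 0, which is the bias a first-arrival detector is meant to remove.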

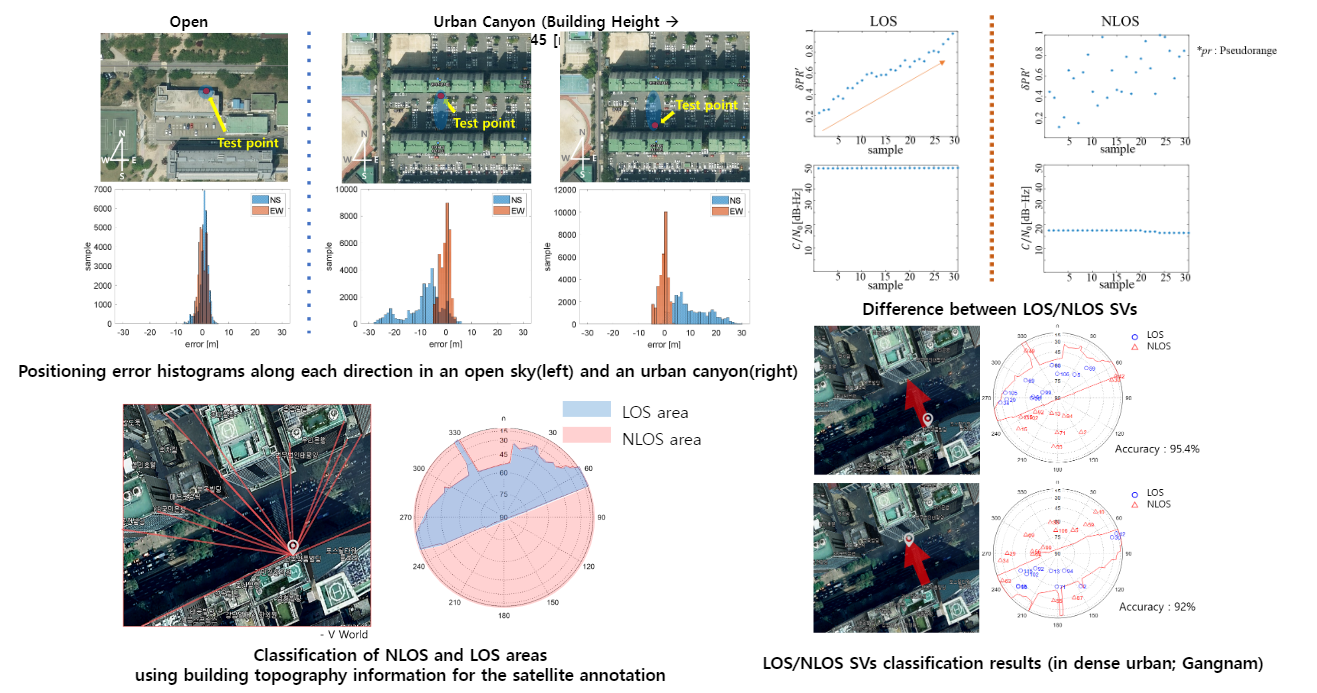

NLOS Satellite classification

- AVE LAB used artificial intelligence (an RNN) to classify non-line-of-sight (NLOS) satellites, which cause large positioning errors in urban areas, with approximately 90% accuracy. Applied to actual satellite receivers, the technique reduced positioning errors by 50%.
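The text does not say whether NLOS-classified satellites are excluded from the position solution or down-weighted. The sketch shows the exclusion variant, with a hypothetical probability threshold and a guard that keeps at least four satellites, the minimum needed to solve for a 3D position plus the receiver clock bias. Satellite IDs and probabilities are made up.

```python
def select_satellites(sats, nlos_prob, threshold=0.5, min_sats=4):
    """Drop satellites the classifier flags as NLOS, but never go below min_sats.

    sats: satellite IDs; nlos_prob: dict ID -> classifier NLOS probability (hypothetical).
    If too few satellites pass, fall back to the min_sats most LOS-like ones.
    """
    los = [s for s in sats if nlos_prob[s] < threshold]
    if len(los) >= min_sats:
        return los
    return sorted(sats, key=lambda s: nlos_prob[s])[:min_sats]

sats = ["G01", "G02", "G03", "G05", "G07", "G09"]
probs = {"G01": 0.1, "G02": 0.9, "G03": 0.2, "G05": 0.05, "G07": 0.8, "G09": 0.3}
used = select_satellites(sats, probs)
```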

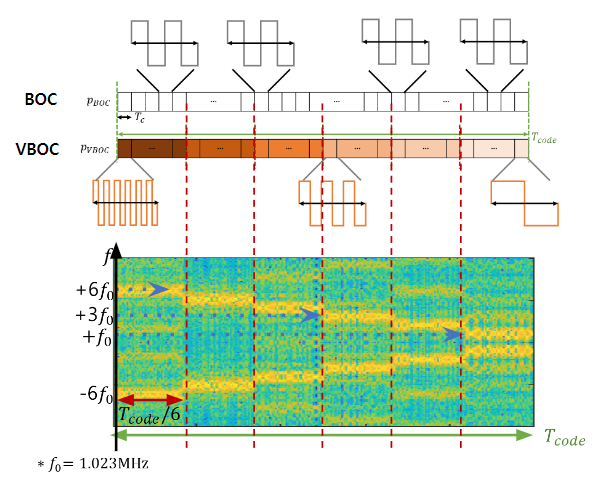

Next-generation GNSS signal modulation

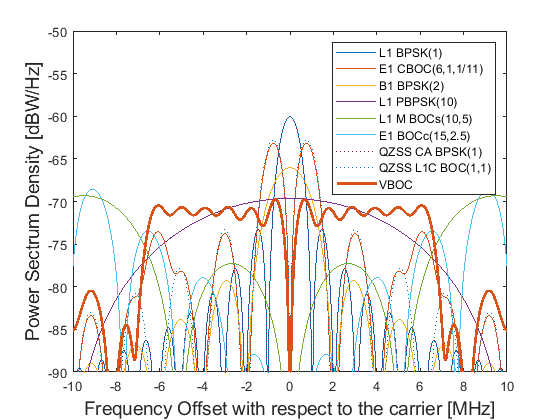

Short-Time Fourier Transform of VBOC(6:-1:1,1)

PSDs of VBOC and conventional GNSS modulations

ACF envelopes of VBOC and conventional GNSS modulations

- Targeting Korean Positioning System (KPS) signals, AVELAB has developed a modulation technique with the properties that next-generation GNSS signals must have: low interference between GNSS systems, high ranging accuracy, and robustness to multipath and noise channels.

- By combining the BOC modulation used in existing GNSS with SFCW (stepped-frequency continuous wave), the modulation keeps a simple structure while delivering excellent performance.

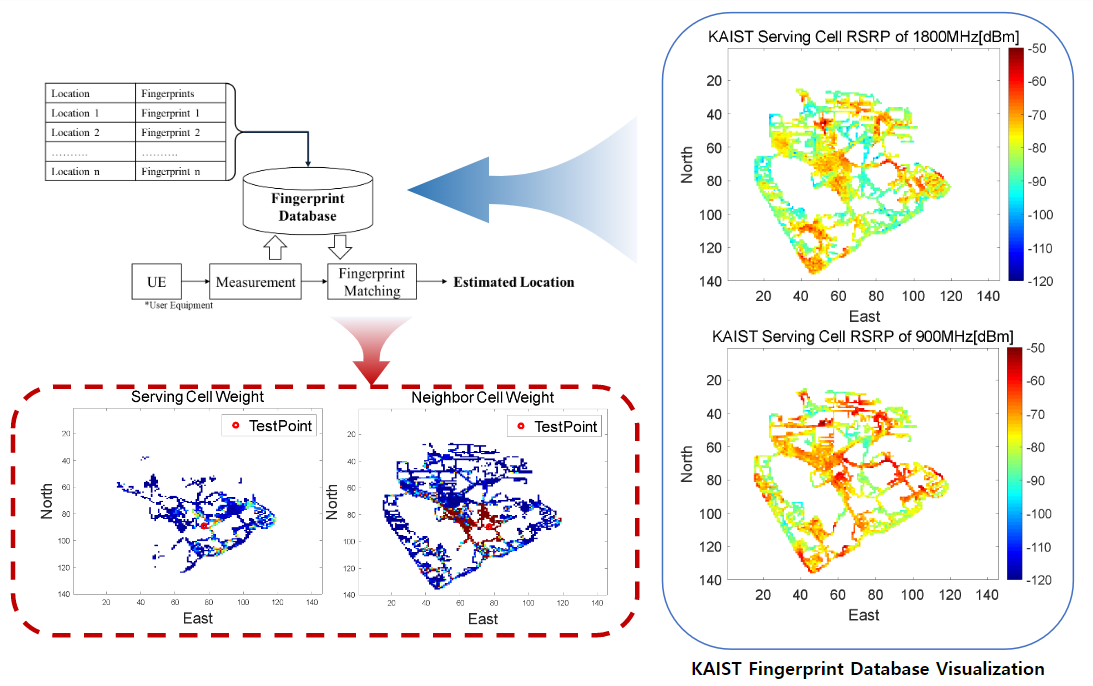

Cellular fingerprint

- For areas such as dense urban centers, where GPS positioning errors are large or positioning is impossible, AVELAB developed a cellular fingerprinting technology that uses signal information from cellular base stations to enable high-accuracy positioning.

- Field tests were conducted on KAIST's main campus (Daejeon) and in an actual urban area (Jongro, from Gwanghwamun to Insa-dong), achieving positioning errors of less than 30 m in urban areas without GPS.

- KT is currently commercializing the cellular fingerprinting technology developed by AVELAB.
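Fingerprinting generally works in two phases: an offline survey records signal measurements (for example, received signal strength from nearby base stations) at known positions, and the online phase matches the current measurement against that database. The sketch is a minimal inverse-distance-weighted k-nearest-neighbour matcher, assuming RSSI vectors in dBm over the same ordered set of cells and planar coordinates in metres; the data are hypothetical, and this is not necessarily the matching method AVELAB or KT use.

```python
import math

def knn_locate(database, query, k=3):
    """Estimate position as the inverse-distance-weighted mean of the k closest fingerprints.

    database: list of (rssi_vector, (x, y)); query: rssi_vector with the same cell order.
    """
    nearest = sorted(database, key=lambda entry: math.dist(entry[0], query))[:k]
    weighted = []
    for rssi, pos in nearest:
        d = math.dist(rssi, query)
        if d == 0.0:
            return pos  # exact fingerprint match
        weighted.append((1.0 / d, pos))
    total = sum(w for w, _ in weighted)
    x = sum(w * p[0] for w, p in weighted) / total
    y = sum(w * p[1] for w, p in weighted) / total
    return x, y

survey = [([-70, -85, -90], (0.0, 0.0)),
          ([-80, -75, -88], (50.0, 0.0)),
          ([-90, -80, -70], (50.0, 50.0)),
          ([-85, -90, -72], (0.0, 50.0))]
estimate = knn_locate(survey, [-75, -80, -89], k=2)
```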